For robots to move beyond pre-programmed routines and interact intelligently with the unpredictable real world, they need a richer understanding of their environment. Visuo-tactile fusion addresses this need by combining visual data with high-resolution touch feedback in a single perception model. By integrating a robotic tactile sensor with camera systems, robots can perceive properties such as texture, compliance, and slip that are difficult or impossible to infer from vision alone. Companies like Daimon are advancing this field by developing sensors that provide the precise, multi-dimensional data that sensor fusion requires, supporting applications in both industrial automation and service robotics.

Daimon Robotic Tactile Sensor Integration

Integrating a robotic tactile sensor into a robotic system is a foundational step toward visuo-tactile fusion. The process involves more than physical mounting: the sensor’s data stream must be synchronized with the camera frames, and the combined information must be processed in real time. The sensor captures detailed contact mechanics (local pressure distribution, micro-vibrations, and force vectors), which are then spatially mapped onto the object’s visual model. This lets the robot correlate what it sees with what it feels and build a unified perception. For instance, an object identified visually can be confirmed and characterized by its tactile signature, which makes grasping and manipulation more reliable, especially for delicate or variable items in logistics and electronics assembly.
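The synchronization and spatial-mapping steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Daimon’s SDK: the `TactileSample` record, the assumption that camera and tactile timestamps share one clock, the 20 ms pairing tolerance, and the sensor-to-camera transform (which would normally come from a hand-eye calibration) are all hypothetical. Each camera frame is paired with the tactile sample nearest to it in time, and a contact point is then expressed in the camera frame with a 4x4 homogeneous transform.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class TactileSample:
    """Hypothetical tactile record; field names are illustrative, not a vendor API."""
    t: float           # timestamp in seconds, assumed to share the camera's clock
    contact: Vec3      # contact point in the sensor frame, metres
    force: Vec3        # 3D contact force in the sensor frame, newtons


def pair_frames(frame_times: Sequence[float],
                samples: Sequence[TactileSample],
                tolerance: float = 0.02) -> List[Tuple[float, Optional[TactileSample]]]:
    """Pair each camera timestamp with the nearest tactile sample in time.

    `samples` must be sorted by timestamp. A frame whose nearest sample is
    more than `tolerance` seconds away is paired with None.
    """
    times = [s.t for s in samples]
    pairs = []
    for ft in frame_times:
        i = bisect_left(times, ft)
        best = None
        # Only the neighbours on either side of the insertion point can be nearest.
        for j in (i - 1, i):
            if 0 <= j < len(samples):
                if best is None or abs(samples[j].t - ft) < abs(best.t - ft):
                    best = samples[j]
        if best is not None and abs(best.t - ft) <= tolerance:
            pairs.append((ft, best))
        else:
            pairs.append((ft, None))
    return pairs


def sensor_to_camera(T: Sequence[Sequence[float]], p: Vec3) -> Vec3:
    """Map point p from the sensor frame into the camera frame.

    T is a 4x4 homogeneous transform in row-major order (rotation plus translation).
    """
    x, y, z = p
    return tuple(T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3] for r in range(3))
```

Frames with no tactile sample inside the tolerance are left unpaired rather than matched to stale data, so a downstream fusion step can treat them as vision-only.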

Vision and Touch for Robotic Systems

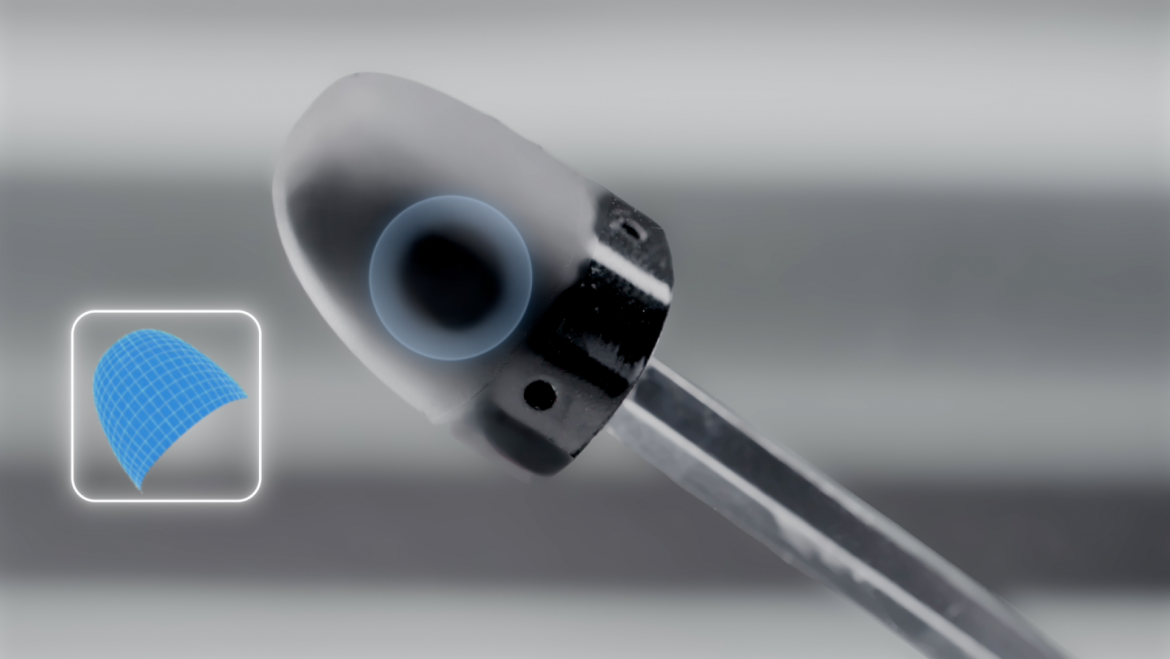

Vision provides a broad, contextual understanding of a scene, while touch offers close-range, local detail. Visuo-tactile fusion in robotic systems leverages the strengths of both: vision guides the hand to an object, and touch refines the interaction. When a robot equipped with an optical tactile sensor, such as Daimon’s DM-Tac F series, makes contact, the sensor captures detailed deformation fields and 3D force data. This tactile feedback can correct for visual errors, such as a slightly misplaced grasp, and detect an impending slip that cameras cannot see. With 30 Hz sampling and full finger coverage, Daimon’s sensors provide the continuous, detailed data this closed-loop control needs, making tasks like precision assembly and adaptive bin-picking more robust.
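One common way to turn 3D force data into slip prevention is a friction-cone check: under Coulomb friction, a contact starts to slide when the ratio of tangential to normal force reaches the friction coefficient. The sketch below applies that heuristic once per tactile sample. It is an illustrative technique, not Daimon’s control method, and the friction coefficient `mu`, the safety `margin`, the force cap, and the convention that the sensor’s z axis is the contact normal are all assumptions.

```python
import math
from typing import Tuple


def next_grip_force(current_n: float,
                    force: Tuple[float, float, float],
                    mu: float = 0.5,
                    margin: float = 0.8,
                    max_force_n: float = 20.0) -> float:
    """One control tick of a friction-cone slip guard.

    Coulomb friction predicts sliding when |f_t| / f_n reaches mu. To keep a
    safety margin, the guard raises the commanded normal (grip) force until the
    tangential load fits inside a narrower cone of slope margin * mu.
    mu, margin and max_force_n are hypothetical values that would need to be
    identified for each gripper, sensor and object.
    """
    fx, fy, fz = force          # z is assumed to be the contact normal
    if fz <= 0.0:
        # No normal load means no contact, so there is nothing to regulate here.
        return current_n
    tangential = math.hypot(fx, fy)
    if tangential / fz < margin * mu:
        return current_n                       # comfortably inside the cone
    required = tangential / (margin * mu)      # normal force that restores the margin
    return min(max(current_n, required), max_force_n)
```

Jumping straight to the required force keeps the sketch short; a practical controller would likely rate-limit the change and filter the noisy force estimate before acting on it.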

Multisensory Perception in Daimon Robotics

Daimon’s approach to multisensory perception focuses on a seamless data pipeline from sensor to decision-making algorithm. Its DM-Tac FM and FS sensors are engineered to deliver more than basic contact detection: they provide a rich dataset that includes contact topography, 3D force, and deformation fields across the entire fingertip area. This multi-parameter output is crucial for advanced perception tasks such as material hardness identification and slip detection. By fusing this high-fidelity tactile data with stereo or depth camera input, Daimon enables robots to perform complex evaluations, such as assessing the ripeness of fruit or confirming a secure grip on a tool, bridging the gap between simple automation and adaptive manipulation.
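Hardness identification and the fruit-ripeness example can be illustrated with a simple stiffness estimate: press on the object, record normal force against indentation depth, and take the least-squares slope. Firmness is a commonly used proxy for fruit ripeness, and the camera’s object class decides which cut-off applies. This is a minimal sketch of that idea, not Daimon’s pipeline; the assumption that indentation depth can be read from the sensor’s deformation field, the `assess_firmness` helper, and the per-class stiffness thresholds are all hypothetical.

```python
from typing import Dict, Sequence


def estimate_stiffness(depths_m: Sequence[float], forces_n: Sequence[float]) -> float:
    """Ordinary least-squares slope of normal force against indentation depth, in N/m."""
    if len(depths_m) != len(forces_n) or len(depths_m) < 2:
        raise ValueError("need at least two paired depth/force measurements")
    n = len(depths_m)
    mean_d = sum(depths_m) / n
    mean_f = sum(forces_n) / n
    sxx = sum((d - mean_d) ** 2 for d in depths_m)
    if sxx == 0.0:
        raise ValueError("depths must vary to estimate a slope")
    sxy = sum((d - mean_d) * (f - mean_f) for d, f in zip(depths_m, forces_n))
    return sxy / sxx


def assess_firmness(visual_label: str, stiffness_n_per_m: float,
                    thresholds: Dict[str, float]) -> str:
    """Combine the camera's object class with the tactile stiffness estimate.

    `thresholds` maps each visually recognised class to a hypothetical
    stiffness cut-off (N/m) separating 'soft' from 'firm'; real values would
    have to be calibrated for the sensor and the produce being handled.
    """
    cutoff = thresholds.get(visual_label)
    if cutoff is None:
        return "unknown"   # no tactile model for this visual class
    return "firm" if stiffness_n_per_m >= cutoff else "soft"
```

Keeping the visual class as the lookup key is what makes this a fusion step: the same stiffness reading can mean "ripe" for one fruit and "overripe" for another, and only the camera can tell them apart.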

Conclusion

Visuo-tactile fusion represents a significant leap forward in robotic perception, moving machines closer to human-like interaction with their surroundings. It solves critical challenges in manipulation reliability and environmental understanding by allowing robots to “see” with their fingers. The success of this approach hinges on the quality and integration of tactile sensing technology. Through its development of compact, high-performance optical tactile sensors, Daimon provides the essential hardware and data foundation that makes sophisticated multisensory perception a practical reality for both industrial and research applications.