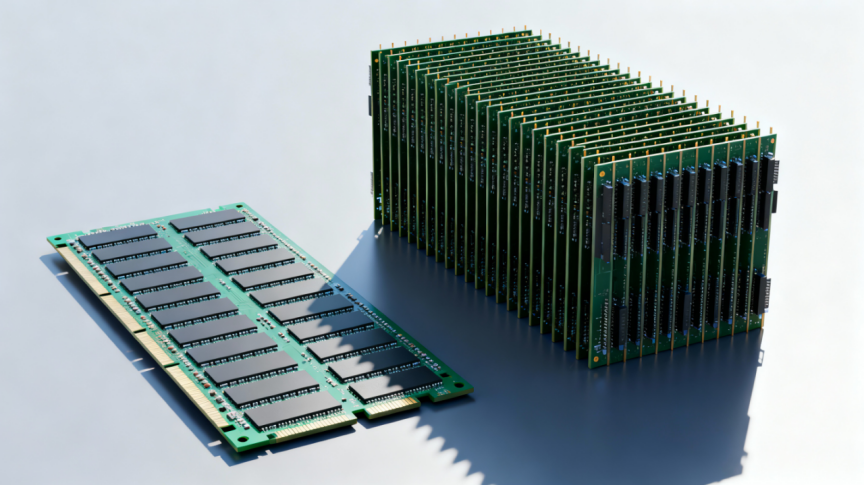

In the high-stakes arena of computational throughput, selecting the right memory architecture is critical. The foundational comparison of GDDR vs. DDR highlights the split between CPU latency optimization and GPU bandwidth prioritization. The cutting-edge battle, however, lies between the raw frequency of GDDR and the massive parallelism of HBM. GDDR uses high per-pin data rates and standard packaging to deliver cost-effective performance for consumer graphics. HBM uses vertical stacking and wide interfaces to achieve superior power efficiency and throughput for AI and data centers. This guide dissects the physical-layer differences, signal integrity challenges, and architectural trade-offs to help you determine the optimal solution for your workload.

Core Architectural Divergence: Frequency vs Parallelism

This section examines the fundamental engineering split between maximizing serial data rates on planar topologies and leveraging vertical integration to overcome physical interconnect limitations.

The Serial vs. Parallel Throughput Dilemma

The primary distinction between these architectures lies in how they achieve bandwidth. GDDR relies on aggressive per-pin data rates, pushing pin speeds to 24 Gbps and beyond in GDDR6X. This approach suits workloads requiring rapid burst access, but it demands significant power to maintain signal integrity over PCB traces. HBM (High Bandwidth Memory) instead adopts a “wide and slow” philosophy. Each stack uses a 1024-bit interface, far wider than the 32-bit interface of a typical GDDR device. As a result, HBM reaches hundreds of gigabytes per second per stack, and terabytes per second across multiple stacks, at much lower per-pin speeds. This reduces the energy penalty of high-frequency switching.
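To make the “wide and slow” trade-off concrete, peak bandwidth can be estimated as the per-pin data rate times the interface width, divided by eight bits per byte. The minimal Python sketch below applies that formula to two illustrative configurations. It uses the 24 Gbps GDDR6X rate mentioned above and an assumed 6.4 Gbps HBM3 pin rate. These are not specifications of a particular product, and real sustained bandwidth falls below this theoretical peak.

```python
def peak_bandwidth_gbps(pin_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s: per-pin rate (Gbit/s) x pin count / 8 bits per byte."""
    return pin_rate_gbps * bus_width_bits / 8


# Illustrative configurations (assumed values for comparison only).
configs = {
    "GDDR6X device (24 Gbps x 32-bit)": (24.0, 32),
    "HBM3 stack (6.4 Gbps x 1024-bit)": (6.4, 1024),
}

for name, (rate, width) in configs.items():
    print(f"{name}: {peak_bandwidth_gbps(rate, width):.1f} GB/s")
```

Multiplying by the number of devices or stacks gives the system total. For example, four HBM3 stacks at this assumed rate would provide about 3.3 TB/s, even though each pin runs at roughly a quarter of the GDDR6X pin speed.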

Signal Integrity and Packaging Constraints

Physical implementation defines the upper limits of performance. GDDR relies on traditional PCB routing, where crosstalk and insertion loss degrade signals more severely as frequencies rise. Engineers counter this with advanced signaling and equalization techniques. GDDR6X, for example, adopts PAM4 modulation, which carries two bits per symbol and so halves the symbol rate for a given data rate. HBM sidesteps the problem through 2.5D packaging. Through-Silicon Vias (TSVs) connect the stacked DRAM dies vertically, and a silicon interposer links each stack to the processor. This shortens the data path to millimeters, drastically improving signal quality and enabling the density required for AI training clusters, albeit at higher manufacturing complexity.
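PAM4 can be illustrated with a small sketch. It packs two bits into each of four voltage levels, so for the same data rate the channel switches at half the symbol rate of NRZ (two-level) signaling. This lowers the frequency content the PCB traces must carry. The Python example below is a simplified model. The Gray-coded level mapping and the 21 Gbps data rate are illustrative assumptions, not the exact encoding or speed grade of any GDDR6X part.

```python
# Assumed Gray-coded mapping of bit pairs to four amplitude levels.
# Gray coding makes adjacent levels differ by a single bit, so a slicing
# error to a neighboring level corrupts only one bit. Real GDDR6X level
# assignments may differ.
GRAY_PAM4 = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}


def pam4_encode(bits: list[int]) -> list[int]:
    """Map an even-length bit sequence to PAM4 levels, two bits per symbol."""
    if len(bits) % 2:
        raise ValueError("PAM4 encoding needs an even number of bits")
    return [GRAY_PAM4[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def symbol_rate_gbaud(bit_rate_gbps: float, bits_per_symbol: int) -> float:
    """Symbols per second (GBaud) needed to carry the given data rate."""
    return bit_rate_gbps / bits_per_symbol


def nyquist_ghz(symbol_rate: float) -> float:
    """Fundamental frequency of the fastest alternating pattern: half the symbol rate."""
    return symbol_rate / 2


print(pam4_encode([0, 0, 0, 1, 1, 1, 1, 0]))

for scheme, bits_per_symbol in [("NRZ", 1), ("PAM4", 2)]:
    baud = symbol_rate_gbaud(21.0, bits_per_symbol)  # assumed 21 Gbps per pin
    print(f"{scheme} at 21 Gbps: {baud:.1f} GBaud, Nyquist {nyquist_ghz(baud):.2f} GHz")
```

The halving has a cost. At the same voltage swing, each PAM4 eye is roughly one third the height of an NRZ eye, which makes the link more sensitive to noise. This is why PAM4 links still depend on careful equalization and trace design.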

Performance Metrics and Economic Trade-offs

Evaluating the DDR vs. GDDR vs. HBM hierarchy requires balancing bandwidth-per-watt efficiency against the harsh realities of manufacturing yields and deployment costs.

Bandwidth-per-Watt Efficiency Metrics

For data center architects, power efficiency is often the deciding factor. HBM delivers superior bandwidth per watt because it sits on the same package as the GPU or ASIC, which minimizes the energy required to drive signals. For instance, a single HBM3 stack delivers about 819 GB/s (6.4 Gbps per pin across a 1024-bit interface). It does so while consuming significantly less power per bit transferred than a GDDR6 subsystem built to match that throughput. This thermal headroom is crucial in High-Performance Computing (HPC) environments, where power and cooling budgets are already at their limits. It makes HBM the preferred choice for heavy workloads under tight energy constraints.
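The efficiency argument reduces to simple arithmetic: interface power equals bits transferred per second times energy per bit. The sketch below also counts how many GDDR6 devices would be needed to match one HBM3 stack. The 16 Gbps GDDR6 pin rate is an assumption. The energy-per-bit values are hypothetical placeholders, not measured figures, and should be replaced with vendor datasheet numbers for any real comparison.

```python
import math


def interface_power_w(bandwidth_gb_per_s: float, energy_pj_per_bit: float) -> float:
    """Interface power in watts: bits per second x joules per bit."""
    bits_per_second = bandwidth_gb_per_s * 8e9        # GB/s -> bit/s
    return bits_per_second * energy_pj_per_bit * 1e-12  # pJ -> J


# One HBM3 stack: 6.4 Gbps per pin x 1024 pins / 8 bits per byte.
HBM3_STACK_GB_S = 6.4 * 1024 / 8   # 819.2 GB/s
# Assumed GDDR6 device: 16 Gbps per pin x 32-bit interface / 8.
GDDR6_DEVICE_GB_S = 16.0 * 32 / 8  # 64.0 GB/s

# Hypothetical placeholder energy costs in pJ/bit, NOT measured values.
ENERGY_PJ_PER_BIT = {"HBM3": 4.0, "GDDR6": 8.0}

devices_needed = math.ceil(HBM3_STACK_GB_S / GDDR6_DEVICE_GB_S)
print(f"GDDR6 devices needed to match one HBM3 stack: {devices_needed}")

for memory, pj in ENERGY_PJ_PER_BIT.items():
    watts = interface_power_w(HBM3_STACK_GB_S, pj)
    print(f"{memory} at {HBM3_STACK_GB_S:.1f} GB/s and {pj} pJ/bit: {watts:.1f} W")
```

At fixed bandwidth, power in this model scales linearly with energy per bit, so the gap between the two technologies depends entirely on that per-bit figure. A fuller comparison would also count the controller, PHY, and board-routing overhead of driving many discrete devices.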

Manufacturing Complexity and Economic Viability

While HBM wins on performance metrics, GDDR wins on economics. HBM depends on CoWoS (Chip-on-Wafer-on-Substrate) packaging and complex TSV processes. These dependencies make its supply chain fragile and expensive, with costs often estimated at 3x to 4x more per bit than GDDR. GDDR, by contrast, benefits from mature, high-yield manufacturing processes compatible with standard PCBs. Consumer graphics cards and workstations are segments where GDDR vs. DDR comparisons already favor GDDR for its balance of speed and cost, and there the HBM premium is hard to justify. Consequently, GDDR remains the dominant standard for cost-sensitive applications that need high bandwidth without the logistical hurdles of advanced packaging.

UniBetter: Your Trusted Partner for Electronic Component Procurement

UniBetter excels in navigating the complex semiconductor supply chain, offering robust solutions for sourcing high-performance memory and critical integrated circuits.

As a leading independent distributor ranked 21st globally, UniBetter does not manufacture chips but specializes in the professional procurement and distribution of electronic components. The company leverages its proprietary CSD Quality Management System—integrating Component Source Discovery, 3-level quality testing, and dynamic supplier management—to ensure every part distributed is 100% authentic. With access to over 7,000 reliable suppliers, UniBetter resolves shortage issues for hard-to-find or obsolete components that are critical for maintaining legacy infrastructure or scaling new AI hardware.

UniBetter distributes a wide range of electronic components and supports clients through six key advantages:

- mitigating supply shortages
- providing turn-key procurement with 2-hour BOM quotes
- delivering significant cost savings through global sourcing experts
- managing obsolescence
- offering franchised product support
- facilitating excess inventory resale

Whether your project demands the high-frequency capabilities of GDDR or the specialized components surrounding HBM architectures, UniBetter sources the necessary parts under AS6081- and ISO 9001-certified processes.

Assess your specific throughput requirements and power constraints today to source the memory architecture components that prevent system bottlenecks and maximize ROI.